Could UK build a national large language AI model to power tools like ChatGPT?

The UK urgently needs to develop its own artificial intelligence large language model (LLM) to allow its start-ups, scale-ups and enterprise companies to compete with rivals in China and the US on AI and data, a senior BT executive told MPs earlier today.

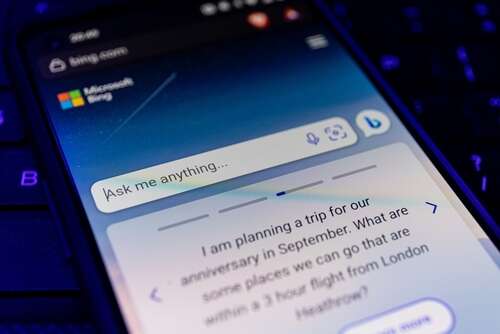

Large language models and other foundation AI models power tools such as OpenAI’s ChatGPT chatbot, as well as image generators and other generative AI applications, including in the health and materials science fields. The largest models today are owned by big tech companies such as Google, Meta and Microsoft (through its partnership with OpenAI), but they are also being developed at a national level in China and the US.

To better understand how the UK should regulate, promote and invest in artificial intelligence technology, the House of Commons Science and Technology select committee is holding an inquiry entitled Governance of artificial intelligence. Today it heard from Microsoft, Google and BT executives, with BT’s chief data and AI officer Adrian Joseph declaring that the UK was in an “AI arms race”. He said the country could be left behind without the right investment and government direction.

“We have been in an arms race for a long time,” he told MPs. “Big tech companies have been buying start-ups and investing in their own expertise for ten if not 20 years now.” He added that the UK is competing not just with US companies but also with Chinese companies such as Baidu, Tencent and Alibaba, which have the scale to roll out large models quickly.

Joseph, who also sits on the UK AI Council, said: “I strongly suggest the UK should have its own national investment in large language models and we think that is really important. There is a risk that we in the UK will lose out to the large tech companies and possibly China. There is a real risk that unless we leverage and invest, and encourage our start-up companies, we in the UK will be left behind with risks in cybersecurity, healthcare and everywhere else.”

The UK is currently ranked third in the Global AI Index, and the government has announced plans to capitalise on this momentum by turning the country into a global AI superpower within the decade. But Joseph warned that without proper investment, that plan would be put at risk.

Need for home-grown UK large language model

A number of UK AI companies have been sold to US and European rivals in recent years, including DeepMind, the neural network research company founded out of University College London in 2010. It was acquired by Google in 2014 and has developed one of the largest large language models to date, called Gopher.

Dame Wendy Hall, Regius Professor of Computer Science at the University of Southampton, also appeared before the panel of MPs and echoed the urgent need for greater sovereignty over large language models and AI technology, particularly when used on NHS data. “We are at the beginning of the beginning with AI, even after all of the years it has been around. We need to keep our foot on the accelerator or risk falling behind,” Dame Wendy said.

She urged the government to get behind proposals for a sovereign large language model and the computing capacity necessary to make it a reality and accessible to academia and start-ups alike. “It needs the UK government to get behind it, not in terms of the money, as the money is out there, but as a facilitator,” she explained, warning that without government support the UK would fall behind and lose out, as it has with cloud computing, ceding control to large US companies.

“What we see with ChatGPT and the biases and things it gets wrong, it is based on searches across the internet,” Dame Hall continued. “When you think about taking generative AI and applying it to NHS data – data that we can trust – that is going to be incredibly powerful. Do we want to be reliant on technology from outside the UK for something that can be so powerful?”

The UK wouldn’t be the first country to consider the need for a sovereign large language model, particularly with regard to nationally sensitive or valuable data. In the US, Scale AI has developed a large language model designed for national security, intelligence and operations, used by the army, air force and contractors. China also has Wu Dao, a massive language model built by the government-backed Beijing Academy of Artificial Intelligence.

“We have to be more ambitious rather than less. There is a sense of feeling we’ve done AI. This is just the very beginning of the beginning. We need to build on what we’ve done, make it better, world-leading and focus on sovereign capability,” Dame Wendy declared at the end of the session.

UK AI needs ‘light touch’ regulation

As well as focusing heavily on the need for a national large language model, the MPs also questioned the experts on the need for AI regulation and how it should be approached, with all of them saying regulation should focus on end use rather than development.

Hugh Milward, general manager for corporate, external and legal affairs at Microsoft UK, said AI is a general-purpose technology and that, from a regulatory perspective, it is best to focus on the final use case rather than on how it is built, trained and developed.

“If we regulate its use then that AI, in its use in the UK, has to abide by a set of principles,” he said. “If we have no way to regulate the development of the AI and its use in China, we can regulate how it is used in the UK. It allows us to worry less about where it is developed and worry more about how it is used irrespective of where it is developed.”

He gave the example of a dual-use technology such as facial recognition, which could be used to recognise a cancer in a scan, find a missing child or identify a military target, or be used by a despotic regime to find unlicensed citizens. “Those are the same technology used for very different purposes and if we restrict the technology itself we wouldn’t get the benefits from the cancer scans in order to solve for the problem with its use in a surveillance society,” he said.

James Gill, partner and co-head of law firm Lewis Silkin’s digital, commerce and creative team, watched the session for Tech Monitor and said regulation in this space is “very much a movable feast”. He explained: “AI has huge potential as a problem-solving tool, including to address major socio-economic and environmental challenges. As ever with disruptive technology, the challenge will be to develop a regulatory position which provides sufficient protections for safe usage while not stymying progress and innovation.

“As the committee heard, the general regulatory environment for digital technology is much more developed now than at, say, the outset of the Web 2.0 revolution – and the law needs to keep abreast of technological advancements. Savvy businesses wishing to develop or deploy AI will now be planning ahead to understand the implications of the emerging regulatory framework.”
